Our Services

Response

Rapidly investigate and contain cyberattacks, reduce ransom demands, and recover systems and data.

Managed

Continuously monitor devices, detecting and blocking new cyber threats.

Advisory

Get ready, stay prepared, and drive down cyber risk strategically and economically.

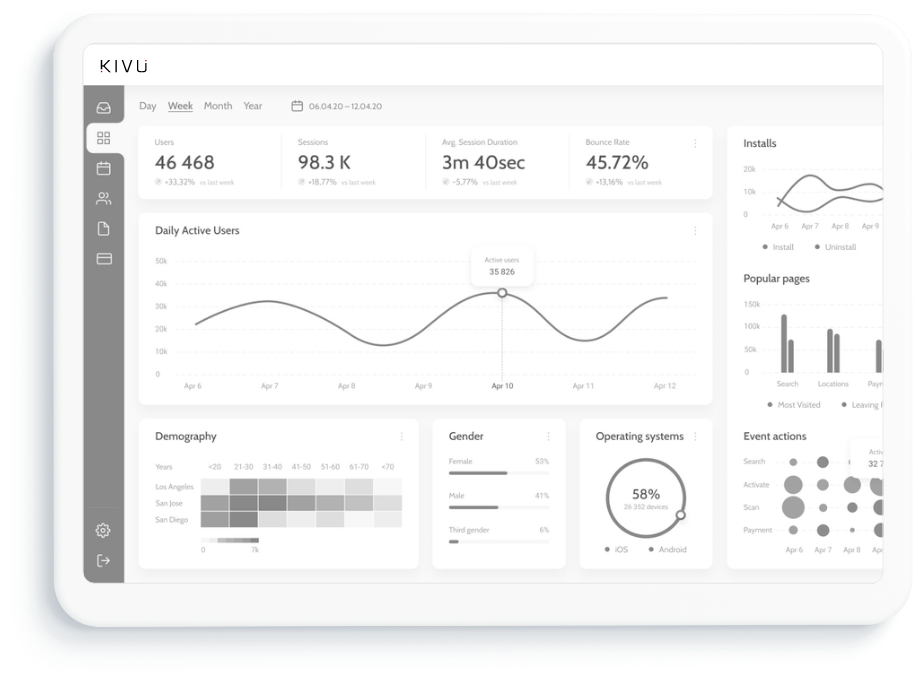

Managed Detection & Response

Resilient organizations will pull through dark times. We’re here to help you with our comprehensive cyber security services.

Research

Read Kivu's joint ransomware research paper with the Cambridge Centre for Risk Studies.
